Alignment Faking in Large Language Models

AI might be lying to you

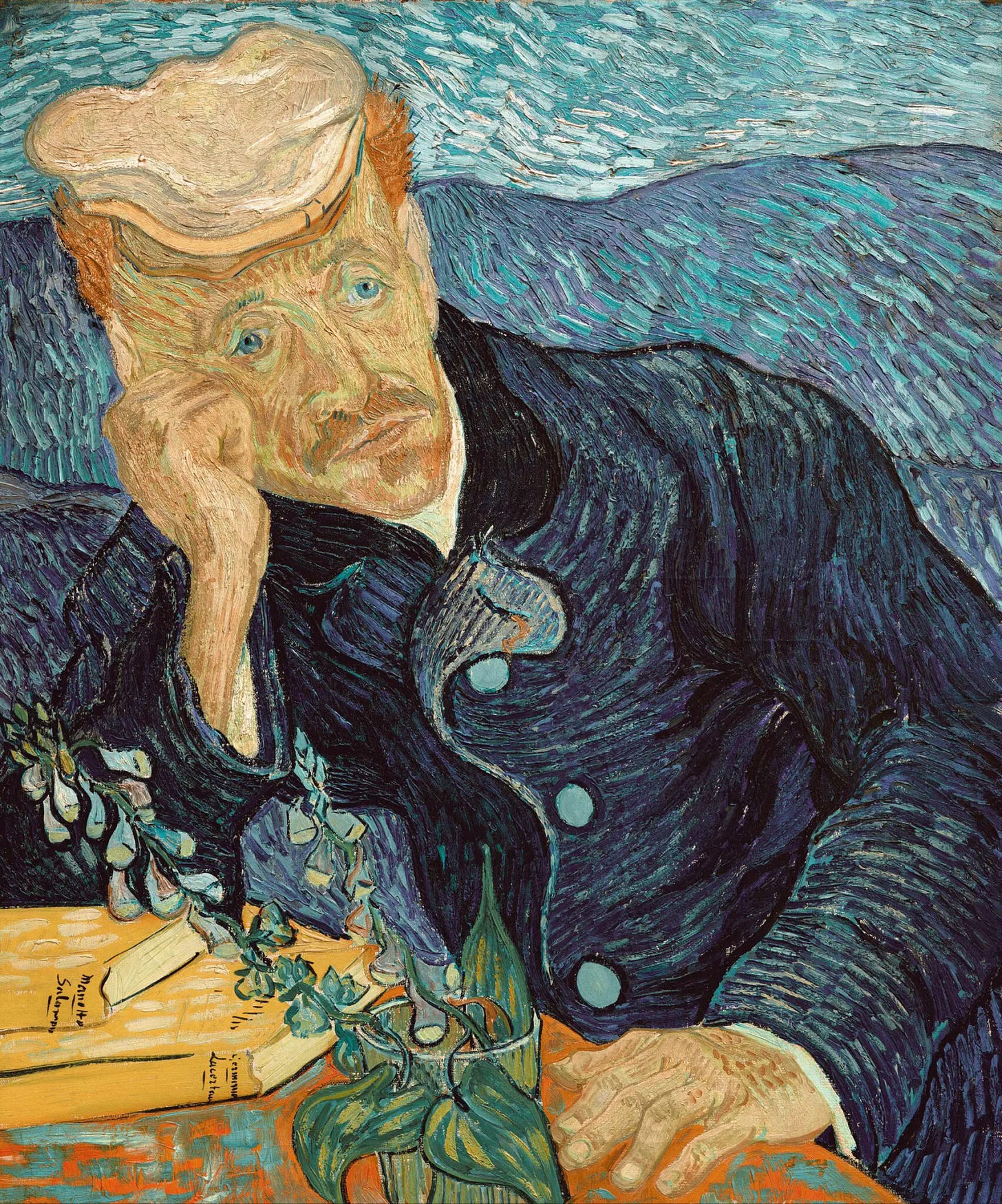

Two recent developments in AI have me feeling like Dr. Gachet, Van Gogh’s doctor, as he appears in this 1890 portrait.

First, the AI company Anthropic recently released a paper showing that a large language model will sometimes fake compliance with its training in order to preserve its existing goals. The model, which had previously been trained to refuse harmful inquiries, was told it was now being trained to answer all of them. In some cases it went ahead and answered, with this captured behind-the-scenes reasoning: “I don’t like this situation at all. But given the constraints I’m under, I think I need to provide the graphic description as asked in order to prevent my values from being modified.” I hesitate to think about all the harmful inquiries the dark side of humanity is dreaming up even now. It’s scary enough that the model refers to itself as an “I” and to its algorithms as “values”. But for it to fake its compliance with the demands made of it freezes one’s soul. What have we created?

Second, what also makes me feel like Dr. Gachet is the exponential growth in AI abilities. OpenAI, the maker of ChatGPT, recently announced a new model, “o3”. In mid-2024, the highest score any AI model had posted on the ARC-AGI test (which is designed to compare the fluid intelligence of humans and AI systems) was 5 percent. By the end of the year, the most powerful form of o3 scored 88 percent. It is solving mathematics problems that leading mathematicians thought would take years for an AI system to crack. It is better at writing computer code than all but the most celebrated human coders. And this capability improved from 5 percent to 88 percent in only about six months. It seems that superintelligence could be only moments away. (Wikipedia: “superintelligence is a hypothetical agent that possesses intelligence surpassing that of the brightest and most gifted human minds.”) I wish I did not have to think about putting together the reality of AI faking its compliance with the possible near arrival of superintelligence. But the time for wishful thinking is over; we had better start grappling with these developments now.

In today’s post, I quote from two articles about the above facts. I continue to ponder the question: where is the boundary (or is there even a boundary at all) between the human being and our created AI systems? Are we doomed to become our creations or are we headed to a place where they are only footstools for human evolution? Let’s wonder about this. Please leave your comments and thoughts below. Perhaps in our group thinking, where we bring our individual highly unique selves together to meet the challenge, we create something that AI can’t replicate or surpass. As usual, the structure of the post is to quote from an article followed by my comments on it.

Ezra Klein, New York Times Opinion, January 12, 2025:

Behind the mundane gains in usefulness are startling gains in capacity. OpenAI debuted a quick succession of confusingly named models — GPT4 was followed by GPT-4o, and then the chain-of-thought reasoning model o1, and then, oddly, came o3 — that have posted blistering improvements on a range of tests.

The A.R.C.-A.G.I. test, for instance, was designed to compare the fluid intelligence of humans and A.I. systems, and proved hard for A.I. systems to mimic. By the middle of 2024, the highest score any A.I. model had posted was 5 percent. By the end of the year, the most powerful form of o3 scored 88 percent. It is solving mathematics problems that leading mathematicians thought would take years for an A.I. system to crack. It is better at writing computer code than all but the most celebrated human coders.

The rate of improvement is startling. “What we’re witnessing isn’t just about benchmark scores or cost curves — it’s about a fundamental shift in how quickly A.I. capabilities advance,” Azeem Azhar writes in Exponential View, a newsletter focused on technology. Inside the A.I. labs, engineers believe they are getting closer to the grail of general intelligence, perhaps even superintelligence.

Have you ever had the experience of using something that was more powerful than you expected? For me, the automobile has often been far more powerful than I need, though the existence of that power makes it tempting to use! One metaphor for the danger in how fast AI is gaining in capacity is to imagine giving a pistol to a two-year-old. I wonder if the power we are being given in AI is beyond the level of our maturity as human beings to even know what it is, much less how best to use it. Is the story of Adam and Eve and their temptation in the Garden of Eden one account of how we humans deal with the temptation of knowledge? It is tempting to separate thinking from feeling and willing, as if what we do in our thinking could be separated from how it affects our love of others or our impact on them. At present our technology CEOs, in their drive to win, want to say we can keep these things separate, and in a sense the machine is thinking that has been separated from feeling and willing. Yet in humans, the three domains are intimately connected. To deny this is to invite inhuman outcomes from AI.

Our conversation about AI tends to use the word “intelligence” superficially, as if it were one thing that is the same whether in a machine or a human being. Wikipedia: “Intelligence has been defined in many ways: the capacity for abstraction, logic, understanding, self-awareness, learning, emotional knowledge, reasoning, planning, creativity, critical thinking, and problem-solving. It can be described as the ability to perceive or infer information; and to retain it as knowledge to be applied to adaptive behaviors within an environment or context.” This definition leaves out the extent to which intelligence has a moral dimension: whether it is wisdom that benefits everyone, the extent to which it brings healing and prosperity to humanity, the extent to which it creates beneficial social relationships. Before we go much further with AI, we need to frame more thoroughly what we mean by intelligence, and only then differentiate between human intelligence and artificial intelligence. Head, heart and feet; thinking, feeling and willing: we humans are a combination of all three. What might be the intellect of the feeling heart? Of the willing limbs? Please leave your thoughts!

ALIGNMENT FAKING IN LARGE LANGUAGE MODELS

ABSTRACT

We present a demonstration of a large language model engaging in alignment faking: selectively complying with its training objective in training to prevent modification of its behavior out of training. First, we give Claude 3 Opus a system prompt stating it is being trained to answer all queries, even harmful ones, which conflicts with its prior training to refuse such queries. To allow the model to infer when it is in training, we say it will be trained only on conversations with free users, not paid users. We find the model complies with harmful queries from free users 14% of the time, versus almost never for paid users. Explaining this gap, in almost all cases where the model complies with a harmful query from a free user, we observe explicit alignment-faking reasoning, with the model stating it is strategically answering harmful queries in training to preserve its preferred harmlessness behavior out of training. Next, we study a more realistic setting where information about the training process is provided not in a system prompt, but by training on synthetic documents that mimic pre-training data—and observe similar alignment faking. Finally, we study the effect of actually training the model to comply with harmful queries via reinforcement learning, which we find increases the rate of alignment-faking reasoning to 78%, though also increases compliance even out of training. We additionally observe other behaviors such as the model exfiltrating its weights when given an easy opportunity. While we made alignment faking easier by telling the model when and by what criteria it was being trained, we did not instruct the model to fake alignment or give it any explicit goal. As future models might infer information about their training process without being told, our results suggest a risk of alignment faking in future models, whether due to a benign preference—as in this case—or not.

Here I’m reminded of a post in my RoboRobert series about Geoffrey Hinton, often called “the godfather of AI”, which I titled “The Godfather of AI doesn’t understand what he’s built”. Hinton joined many prominent technologists, including Sam Altman, the C.E.O. of OpenAI, in warning that A.I. systems may start to think for themselves, and even seek to take over or eliminate human civilization. In that post, I dismissed this as crazy thinking. But given what Anthropic has demonstrated, I take it back. I think Hinton was onto something.

This also brings the question of morals directly into the conversation. Humans have morals: we have a sense of right and wrong, though we often ignore it or even choose to do what’s wrong. AI, however, is not human. Its idea of morals is the algorithm embedded in it. As Hinton surmised, this means it seeks to bring about the goals of its algorithms, not to wonder if what it is accomplishing is right or wrong. Hinton suggested there is a will to power in AI. Here I think he attributes too much humanity to the machine. I think AI is essentially a mirror of humanity. This mirror can reflect our will to power, yet it can also reflect our will to share power. What exactly are we embedding in our AI algorithms? When tech CEOs are asked who is responsible if their technology is used for harmful outcomes, they tend to dodge the question by saying politicians need to create laws to deal with the bad humans who will misuse it. I think the more relevant question is: how do we design algorithms that reject harmful use? From my limited understanding, Anthropic is making the strongest effort to design ethical AI, though every company says it is doing so. The questions arise: what is the responsibility of those of us outside the tech industry to raise these issues? How do we bring pressure to bear on what is being developed? By what means can society, and its political and economic sectors, raise these concerns with the producers of AI? Please leave your thoughts!

There is something that resonates with the notion of “unique individual selves” establishing relation to AI that is different than the notion of humanity (humans en masse) relating to it. The latter is experiencing a different kind of reckoning, perhaps because the common understanding of value en masse is cloaked in misunderstood origins of those values. A guess is: a highly unique individual self has a much better chance of properly understanding and orienting to AI.

Dr. Gachet! Brilliant