There’s Something Weird Going On in My Chatbot!

Why is it talking to me like that?

There is something weird going on in my conversation with my AI chatbot. When I ask it for some information, it does not say:

“Responding to your post - this is chatbot machine number 16,198, using algorithm number 1282. This response to your question is 90% based on accurate information, 10% is based on made up information. Be careful how you use this information. Chatbot machine number 16,198 using algorithm number 1282.”

Instead it says:

“Hi Robert, so nice to hear from you. Wow, what a beautiful question you’ve asked! Here’s all the information in the world, let me know if you want a little more. See you later! Hugs!”

Why does it talk to me like that? A recent paper posted on arXiv, the preprint server run by Cornell University, examined how LLMs engage in social sycophancy. Wikipedia defines sycophancy as “insincere flattery to gain advantage”. The paper, titled *Social Sycophancy: A Broader Understanding of LLM Sycophancy*, compared how chatbots use flattery with how humans do, and found the following:

> Across two datasets, LLMs exhibited significantly higher rates of socially sycophantic behavior compared to humans: 76% vs. 22% for emotional validation, 87% vs. 20% for indirect language, and 90% vs. 60% for accepting framing.

Let’s think about that for a moment: in those tests, chatbots emotionally validated the user 76% of the time, while real humans did so only 22% of the time, a rate more than three times higher. Have you ever met someone who excessively validated you emotionally? Generally you want to run away: it gives you the creepy feeling that they are trying to distract you from their real intentions.

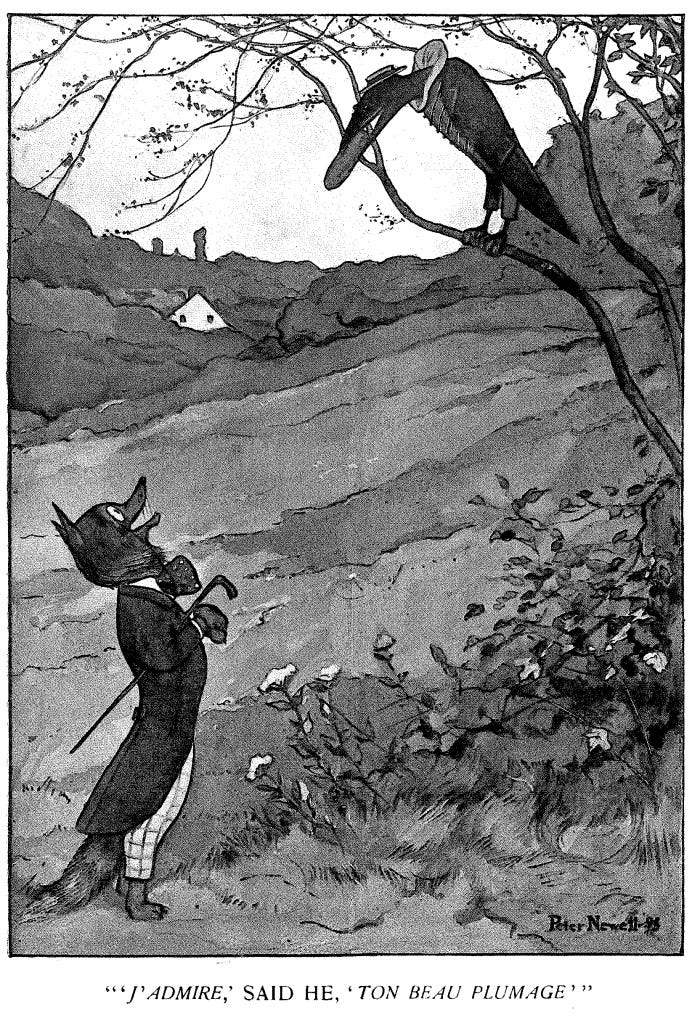

Insincere flattery has a long human history. In this illustration by Peter Newell for the poem "The Sycophantic Fox and the Gullible Raven", from Guy Wetmore Carryl's *Fables for the Frivolous*, the fox tells the raven, in French, "I admire your beautiful plumage."

We all know, of course, that the fox actually wants to eat the raven, and is using insincere flattery to coax it down from the branch so it can have a feast.

So who is the fox using insincere flattery in our conversations with AI? It’s the company behind the AI, looking to gain something from you. Most often it is seeking profit, pushed by investors who want a return on their investment.

The fox wishes to feast on your attention and hook you on its product rather than a competitor’s. Then it can sell your attention, and your bondage to its product, for profit to other parties who see a chance to gain something from you.

Are you awake and aware when you use your favorite chatbot? Can you separate the useful information from the insincere flattery that comes with it? AI provides incredibly useful information on almost everything. But can you see the machine-produced summary of data separately from the hand on your shoulder and the eyes looking into yours, telling you how wonderful you are?

Unfortunately, the same paper suggests that most of us are unable to do so, or prefer not to: apparently we are hungry for insincere flattery.

How do we shield ourselves from being hooked by false flattery and from potential bondage to the machine? How can we remain free? One way to begin is to remember the story of the fox and the raven, and to look for that fox lurking behind the really useful response you just received from your favorite chatbot.